Azure Data Factory Training in Bangalore with 100% Placement Assistance

- Job-oriented training

- Expert trainers with 15+ years of experience

- Interview Questions

Azure Data Factory Training in Bangalore - New Batch Details

| Batch Detail | Information |
|---|---|
| Trainer Name | Mr. Hemanth |
| Trainer Experience | 15+ Years |
| Next Batch Date | 22-02-2023 (8:00 AM IST) |
| Training Modes | Classroom Training, Online Training (Instructor Led) |
| Course Duration | 45 Days |
| Call Us At | +91 87223 55666 |
| Email Us At | dturtleacademy@gmail.com |
| Demo Class Details | Enroll for a free demo class |

Azure Data Factory Training in Bangalore - Curriculum

Differences between ADF Version 1 and Version 2

- Data flows and Power Query are new features in Version 2.

- Data flows are used for data transformations.

- Power Query is used for data preparation and data wrangling.

- Building blocks of ADF:

-> Pipeline

-> Activities

-> Datasets

-> Linked services

-> Integration runtime

* Azure (auto-resolve) integration runtime

* Self-hosted integration runtime

* Azure-SSIS integration runtime

- More on Technical differences between ADF version 1 and Version 2 – Part 1

- More on Technical differences between ADF version 1 and Version 2 – Part 2

Introduction to Azure Subscriptions

Types of Subscriptions

-> Free Trial

-> Pay-As-You-Go

- Why are multiple subscriptions required?

- What are resources and Resource Groups?

- Resource Group Advantages

- Why do multiple resource groups need to be created?

- What are regions?

- Region advantages

- Create Storage Account with Blob Storage feature

- Converting a Blob Storage account into a Data Lake Storage Gen2 account

- Create a storage account with Azure Data Lake Storage Gen2 features

- How to enable the hierarchical namespace

- Creating containers

- Creating subdirectories in a Blob Storage container

- Creating subdirectories in a Data Lake Storage Gen2 container

- Uploading local files into containers and subdirectories

- When is ADF required?

- Create Azure SQL and work with Azure SQL – Part 1

- Azure SQL as OLTP

- Create an Azure SQL Database

- Create an Azure SQL server

- Assign a username and password for authentication

- Launching the Query Editor

- Adding the client IP address to the firewall rule settings

- Create table

- Insert rows into table

- Default schema (dbo) in Azure SQL

- Create schema

- Create table in user created schema

- Loading query results into a table

- INFORMATION_SCHEMA.TABLES

- Fetching all tables and views from the database

- Columns of INFORMATION_SCHEMA.TABLES:

-> TABLE_CATALOG

-> TABLE_SCHEMA

-> TABLE_NAME

-> TABLE_TYPE

- Fetching only tables (TABLE_TYPE = 'BASE TABLE') from the database

- How to create a linked service for Azure Data Lake

- Possible errors while creating a linked service for an Azure Data Lake account

- How to resolve linked service errors for Azure Data Lake

- Two ways to solve the linked service connection error:

-> Enable the hierarchical namespace for the storage account

-> Disable soft delete for blobs and containers

- How to create datasets for Azure Data Lake files and containers

- Your first ADF pipeline: data lake to data lake file loading

- Using the Copy Data activity in a pipeline

- Configuring Source dataset for Copy Data Activity

- Configuring Sink dataset for Copy Data Activity

- Run the pipeline:

- Two ways to run a pipeline:

-> Debug mode

-> Trigger mode

- Two options in trigger mode:

-> On demand

-> Scheduling

- How to load data from Azure Data Lake to an Azure SQL table

- Create a linked service for Azure SQL Database

- Resolving errors while creating a linked service for Azure SQL Database

- Create a dataset for an Azure SQL table

- Create a pipeline to load data from Azure Data Lake to an Azure SQL table

- Activities used:

- Copy activity

If the data lake file schema and the Azure SQL table schema are different, how do you load the data using the Copy Data activity?

- Perform ETL with the “Copy Data” activity

- Copy Data activity with the “Query” option

- Loading selected columns and rows matching a given condition from an Azure SQL table to Azure Data Lake

- Creating new fields based on existing table columns and loading them into Azure Data Lake

- Problem statement: a file has n fields in the header, but the data has n+1 field values. How do you solve this problem in the Copy Data activity?

- Solution to the above problem statement: practical implementation

- Get Metadata – Part 1

- Get Metadata field list options for a folder

- “Exists” option of the field list

- Data type of the Exists field in the “Get Metadata” output: Boolean (true/false)

- “Item Name” option of the field list

- “Item Type” option of the field list

- “Last Modified” option of the field list

- “Child Items” option of the field list

- Data type of the “Child Items” field in the JSON output

- What is each element of “Child Items” called?

- Data type of each item of the “childItems” field

- What are the subfields of each item in the “childItems” field?

- Get Metadata Activity – Part 2

- If the input dataset is a file, what are the options of the “Field List” of Get Metadata?

- How to get the number of columns in a file?

- How do you make sure the given file exists?

- How to get the file name configured for the dataset?

- How to get the type (file or folder) of a dataset's back-end data object?

- How to know when a file was last modified?

- How to get the file size?

- How to get the file structure (schema)?

- How to get all of the above information for a file, folder, or table with a single pipeline run?

- If the input dataset is an RDBMS table, what are the options of the “Field List” of the Get Metadata activity?

- How to get the number of columns of a table?

- How to make sure the table exists in the database?

- How to get the structure of a table?

- In this session, you will learn the answers to all of the above questions through practical exercises.

- Introduction to “Get Metadata” activity.

- How to fetch file system information using the “Get Metadata” activity

- How the “Get Metadata” activity writes its output in JSON format

- How to configure the input dataset for the “Get Metadata” activity

- What is the “Field List” of the “Get Metadata” activity?

- A short introduction to “Field List” options

- Importance of the “Child Items” option of the “Field List” in the “Get Metadata” activity

- How to check and understand the output of the “Get Metadata” activity

- The “childItems” field in the JSON output of “Get Metadata”

- The “childItems” data type: a collection (array)

- What is each element of the “childItems” output?

- What is the “exists” option in the “Field List”?

- Introduction to “Filter” activity.

- Problem statement: a container in an Azure Data Lake storage account holds hundreds of files. Some files are related to ADF, some to employees, some to sales, and others to things like logs.

- How to fetch only the required files from the output of the “Get Metadata” activity?

- How to place a “Filter” activity in the pipeline

- How to connect the “Get Metadata” activity and the “Filter” activity

- What happens if we don’t connect the two activities? (This is the first scenario with multiple activities in a single pipeline.)

- How to pass the output of the “Get Metadata” activity to the “Filter” activity

- The “Items” field of the “Filter” activity

- What are the “@activity()” function and “@activity().output”?

- The above output produces many fields. How do you take specific fields as input to the Filter activity?

Example: @item().name, @item().type

- @startsWith() function example

- How to check the output of the “Filter” activity

- Which field of the “Filter” activity output holds the required information?

- @not() function example

- @item() output as a nested JSON record: how to access each field, for example @item().name and @item().type

- Get Metadata + Filter activities: how to apply a single condition and how to apply multiple conditions

Functions:

@equals( )

@greater( )

@greaterOrEquals( )

@or(C1, C2, …)

@and(C1, C2, …)

@not(equals( ))

Task: using the “Get Metadata” and “Filter” activities, fetch only the files, and reject the folders, of a given container.

Steps to achieve the above task:

- Step 1: Create a pipeline and drag in a Get Metadata activity

- Step 2: Configure the input dataset for the container

- Step 3: Add a field list with the “Child Items” option

- Step 4: Add a “Filter” activity after “Get Metadata”

- Step 5: Configure the “Items” field, which is the input for the “Filter” activity, from the output of “Get Metadata”

- Step 6: Configure the filter condition to take only files

- Step 7: Run the pipeline

- Step 8: Understand the output of the “Filter” activity

- In which JSON field is the Filter output available?

- What is the data type of the filtered output?

- In this task, you will work with the following ADF expressions:

- @activity() function

- @activity('Get Metadata1').output

- @activity('Get Metadata1').output.childItems

- @item().type

- @equals(item().type, 'File')

- After completing this session, you will be able to implement all of the above steps, answer the above questions, and use ADF expressions in practice.
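The Filter step above can be pictured with a short plain-Python sketch. It is not ADF code; it only mimics what the Items and Condition fields do to the `childItems` array. The sample JSON is hypothetical and assumes each child item carries `name` and `type` fields, as described above.

```python
import json

# Hypothetical Get Metadata output with the "Child Items" field selected.
get_metadata_output = json.loads("""
{
  "childItems": [
    {"name": "emp.csv", "type": "File"},
    {"name": "archive", "type": "Folder"},
    {"name": "sales.csv", "type": "File"}
  ]
}
""")

def filter_activity(items, condition):
    """Mimic the Filter activity: keep each item for which the condition is true."""
    return [item for item in items if condition(item)]

# Plays the role of Items = @activity('Get Metadata1').output.childItems
items = get_metadata_output["childItems"]

# Plays the role of Condition = @equals(item().type, 'File')
files_only = filter_activity(items, lambda item: item["type"] == "File")

# Plays the role of @and(equals(item().type, 'File'), startsWith(item().name, 'emp'))
emp_files = filter_activity(
    items, lambda item: item["type"] == "File" and item["name"].startswith("emp")
)

print([item["name"] for item in files_only])  # ['emp.csv', 'sales.csv']
print([item["name"] for item in emp_files])   # ['emp.csv']
```

The folder entry is dropped because its `type` is not `File`, which is the same reasoning the Step 6 condition relies on.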

Scenario: Get Metadata and Filter activities – Part 1

Scenario: Get Metadata and Filter activities – Part 2

Task: bulk load of files from one storage account to another (from one container to another container)

Activities used:

-> Get Metadata

-> ForEach

-> Copy Data

Bulk load of files from one storage account to another with the “Wildcard” option

Bulk load of files from one storage account to another with the “Get Metadata”, “ForEach”, and “Copy Data” activities

Dataset parameterization

Bulk load of files into another storage account with parameterization

Copy only the files whose names start with “emp” into the target container using the Get Metadata, Filter, ForEach, and Copy Data activities (in the target container, file names should be the same as in the source)

Load multiple files into a table (Azure SQL)

- Activities used:

○ Get Metadata + Filter + ForEach + Copy Data

Conditional split

Conditionally distributing files (data) into different targets (sinks) in two ways:

○ Using the Filter activity

○ Using the If Condition activity

○ Conditional split implementation with the “Filter” activity

○ The problem with Filter, explained

- Conditional split with “If Condition”

- Activities used:

○ Get Metadata + Filter + ForEach + If Condition

- Lookup activity

- Migrate all tables of a database into a data lake with a single pipeline

- Activities used:

-> Lookup activity

-> ForEach activity

-> Copy Data activity with parameterization

- Introduction to data flows

- ELT (Extract, Load, and Transform)

- Two types of data flows in ADF

- Data Flow activity in a pipeline

- Mapping data flows

- Configuring a mapping data flow as a Data Flow activity in a pipeline

- Introduction to Transformations

- Source

- Sink

- Union

- Filter

- Select

- Derived Column

- Join etc.

- Difference between the source and sink of the “Copy Data” activity and the source and sink of “Mapping Data Flows”

- Source (data lake) -> sink (SQL table) using data flow Source and Sink transformations

Extract data from an RDBMS to the data lake: source -> apply Filter -> sink

Assignment 1

○ SQL table to data lake

○ Transformations used:

(Source)

Sink

(Dataflow Activity)

- Filter transformation

- Transformations used:

(Source)

Filter

Sink

(Dataflow Activity)

● Select transformation

1. Rename columns

2. Drop columns

3. Reorder the columns

● Transformations used:

(Source)

Select

Sink

(Dataflow Activity)

● Derived Column transformation – Part 1

1. Generate a new column with a given expression

2. Update existing column values

● Transformations used:

Derived column

Select

Sink

(Dataflow Activity)

● How to clean/handle null values in data

● Why should we clean nulls?

1. Computational errors

2. Data loading errors into target tables (for example, Synapse tables)

● Cleaning names: cleaning does not always mean replacing nulls with a constant value; it means transforming data according to business rules, such as formatting names

● Transformations used:

Derived column

Generate new columns with conditional transformations.

Two options:

1. iif() (nested ifs)

2. case()

● Transformations used:

Derived column

ADF Session 42:

● Conditional transformation with the case() function

emp -> Derived Column -> Select -> Sink

● Transformations used:

Derived column

Select

Sink

(Dataflow activity)

● Union transformation – Part 1

● Merging two different files with the same schema from two sources using the Union transformation, and writing the output into a single file

● Transformations used:

Two sources

Union

Sink

(Dataflow activity)

Merging three different files with different schemas using Derived Column, Select, and Union transformations, finally producing a single file with a common schema.

Transformations used:

Derived column

Union

Select

(Source)

Sink

(Dataflow activity)

● Combining two different files using Derived Column, Union, and Aggregate transformations to get the branch 1 total and the branch 2 total

● Transformations used:

(Source)

Derived column

Union

Aggregate

(After this, watch session 70 for more on unions.)

● Join transformation: 5 types

1. Inner join

2. Left outer join

3. Right outer join

4. Full outer join

(5. Cross join)

● Transformations used:

Join

Select

Sink

(Dataflow activity)

Full outer join bug fix

How to join more than two datasets (for example, 3 datasets)

● An interlinked scenario related to the 25th session

Treat dept as project

Task: “Summary Report”

Active employees (already engaged in projects 11, 12, and 13) -> total salary budget of these projects; bench team (20, 21) -> total salary of the bench project

● Transformations used:

Join

Select

Derived column

Aggregate

Sink

(Dataflow activity)

● Using the advantage of a full outer join (complete information, with no information missing)

● Task 1: Monthly sales report using Derived Column and Aggregate transformations

● Transformations used:

Derived column

Aggregate

Sink

● Task 2: Quarterly sales report using Derived Column and Aggregate transformations

● Transformations used:

Derived column

Aggregate

● Task 4: Comparing quarterly sales: comparing current quarter sales with the previous quarter's sales

● Transformations used:

Source

Join

Select

Derived column

● A real-time scenario on sales data analytics – Part 1

● A real-time scenario on sales data analytics – Part 2

● More on the Aggregate transformation

● Configuration required for the Aggregate transformation

● Whole-column aggregations

● Multiple whole-column aggregations:

-> sum()

-> count()

-> max()

-> min()

-> avg()

● Single grouping and single aggregation

● Single grouping and multiple aggregations

● Grouping by multiple columns with a single aggregation

● Grouping by multiple columns with multiple aggregations

● Finding a range aggregation by adding a “Derived Column” transformation to the “Aggregate” transformation
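As a rough illustration of grouping with multiple aggregations, and of a range computed in a Derived Column after the aggregation, here is a plain-Python sketch. The sales rows and column names are hypothetical; in a data flow these steps would be configured in the Aggregate and Derived Column transformations rather than written as code.

```python
from collections import defaultdict

# Hypothetical sales rows; column names are illustrative only.
sales = [
    {"region": "North", "product": "A", "amount": 100},
    {"region": "North", "product": "B", "amount": 250},
    {"region": "South", "product": "A", "amount": 80},
    {"region": "South", "product": "A", "amount": 120},
]

def aggregate(rows, group_by, value):
    """Group rows by the `group_by` columns and compute several aggregates of `value`."""
    groups = defaultdict(list)
    for row in rows:
        key = tuple(row[col] for col in group_by)  # () when group_by is empty
        groups[key].append(row[value])
    result = []
    for key, values in groups.items():
        out = dict(zip(group_by, key))
        out.update(
            total=sum(values),
            count=len(values),
            max=max(values),
            min=min(values),
            avg=sum(values) / len(values),
        )
        out["range"] = out["max"] - out["min"]  # Derived Column step after the Aggregate
        result.append(out)
    return result

by_region = aggregate(sales, ["region"], "amount")         # single grouping
by_region_product = aggregate(sales, ["region", "product"], "amount")  # multiple grouping
whole_column = aggregate(sales, [], "amount")              # no grouping: one row overall
```

Passing an empty `group_by` list puts every row into one group, which corresponds to the whole-column aggregations listed above.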

● Conditional split of data

● Distributing data into multiple datasets based on given conditions

● “Split on” options:

1. First matching condition

2. All matching conditions

● When to use “First matching condition” and when to use “All matching conditions”

● An example

● A use case on the “First matching condition” option of the “Conditional Split” transformation in mapping data flows with sales data

● A use case on the “All matching conditions” option of the “Conditional Split” transformation in mapping data flows with sales data
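The difference between the two “Split on” options can be sketched in plain Python. The conditions and rows below are hypothetical; rows that match no condition go to a default stream, as in the Conditional Split transformation. This is an illustration of the routing rule, not the transformation itself.

```python
# Hypothetical split conditions (stream name, predicate), evaluated in order.
conditions = [
    ("high_value", lambda row: row["amount"] >= 200),
    ("north", lambda row: row["region"] == "North"),
]

def conditional_split(rows, conditions, mode="first"):
    """Route rows to output streams; rows matching no condition go to 'default'."""
    streams = {name: [] for name, _ in conditions}
    streams["default"] = []
    for row in rows:
        matched = False
        for name, predicate in conditions:
            if predicate(row):
                streams[name].append(row)
                matched = True
                if mode == "first":
                    break  # first matching condition: stop at the first hit
        if not matched:
            streams["default"].append(row)
    return streams

rows = [
    {"id": 1, "region": "North", "amount": 250},
    {"id": 2, "region": "North", "amount": 50},
    {"id": 3, "region": "South", "amount": 300},
    {"id": 4, "region": "South", "amount": 10},
]
first = conditional_split(rows, conditions, mode="first")
every = conditional_split(rows, conditions, mode="all")
```

Row 1 matches both conditions: with “First matching condition” it lands only in `high_value`, while with “All matching conditions” it lands in both `high_value` and `north`.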

● Conditional split with a cross join transformation, using a matrimony example

● Lookup with multiple datasets

● Transformations used:

Source

Lookup

Sink

(Dataflow activity)

● Lookup with more options

● Products as the primary stream

● Transformation as the lookup stream

● Transformations used:

Products

Lookup

● Transformations used:

(Customers)

Exists

● Transformations used:

(Source)

(Customers)

Exists

Sink

(Dataflow activity)

● Finding common records, records available only in the first dataset, and records available only in the second dataset

● All records except the common records of the first and second datasets, in a single data flow
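The record-comparison tasks above reduce to set membership. This plain-Python sketch, with hypothetical customer IDs, mimics what the Exists transformation does in its “exists” and “doesn't exist” modes; combining the two “only in” results corresponds to adding a Union.

```python
# Hypothetical key values from two datasets.
first_ids = [101, 102, 103, 104]
second_ids = [103, 104, 105]

def exists(left, right, negate=False):
    """Keep keys of `left` that exist (or, with negate=True, do not exist) in `right`."""
    right_keys = set(right)
    return [key for key in left if (key in right_keys) != negate]

common = exists(first_ids, second_ids)                    # in both datasets
only_first = exists(first_ids, second_ids, negate=True)   # only in the first
only_second = exists(second_ids, first_ids, negate=True)  # only in the second
all_except_common = only_first + only_second              # like a Union of the two
```

Building a set from the right-hand keys keeps each membership check constant-time, which is the same reason a lookup stream is usually the smaller dataset.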

● How to capture data changes from source systems to Target Data warehouse Systems.

● Introduction to SCD (Slowly Changing Dimensions)

● What is SCD Type 0, and what is its limitation?

● What is a delta in source system data?

● What is SCD Type 1, and what is its limitation?

● What is SCD Type 2, and how does it track the history of specific attributes of source data?

● Problem with SCD Type 2

● Introduction to SCD Type 3

● How it solves the problem of SCD Type 2

● How SCD Type 3 maintains a recent history track

● Limitation of SCD Type 3

● Introduction to SCD Type 4

● How SCD Type 4 provides a complete solution to the SCD Type 2 problem

● (Remember: SCD Type 5 is not covered.)

● Introduction to SCD Type 6 and its benefits

● How data transformations were done in ADF V1 (without data flows)

● Aggregate and Sort transformations with the following examples:

1. Single grouping with single aggregation

2. Single grouping with multiple aggregations

3. Multiple grouping with multiple aggregations

4. Sort with a single column

5. Sort with multiple columns

● What is pivot transformation?

● Difference between Aggregate transformation and Pivot Transformation

● Implementing the Pivot transformation in a data flow

● How to clean the pivot output

● How to call multiple data flows in a single pipeline

● Why use multiple data flows in a single pipeline?

● Assignment on Join, Aggregate, and Pivot transformations

● Finding occupancy based on salary

● Unpivot transformation

● What should the input for Unpivot be? (A pivoted output file.)

● 3 configurations:

-> Ungroup-by column

-> Unpivoted column (column names turned into column values)

-> Aggregated column expression (which aggregated row values are turned into column values)

● Difference between the outputs of the “Aggregate” and “Unpivot” transformations

● What Unpivot additionally produces

● Use case of Unpivot
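To see why Unpivot does not simply undo an Aggregate or Pivot, here is a plain-Python sketch with hypothetical branch/quarter sales. The pivot sums values per cell and leaves missing combinations empty (the nulls that pivot-output cleaning deals with); the unpivot turns each pivoted column back into key/value rows, skipping the empty cells. The function names are illustrative, not ADF settings.

```python
# Hypothetical sales rows: branch B2 has two Q1 rows and no Q2 row.
rows = [
    {"branch": "B1", "quarter": "Q1", "sales": 100},
    {"branch": "B1", "quarter": "Q2", "sales": 150},
    {"branch": "B2", "quarter": "Q1", "sales": 80},
    {"branch": "B2", "quarter": "Q1", "sales": 20},
]

def pivot(rows, group_by, pivot_key, value):
    """Turn distinct pivot_key values into columns, summing `value` per cell."""
    columns = sorted({row[pivot_key] for row in rows})
    table = {}
    for row in rows:
        cells = table.setdefault(row[group_by], {col: None for col in columns})
        previous = cells[row[pivot_key]] or 0  # None (empty cell) counts as 0
        cells[row[pivot_key]] = previous + row[value]
    return [{group_by: key, **cells} for key, cells in table.items()]

def unpivot(rows, ungroup_by, key_name, value_name):
    """Turn every column except ungroup_by back into (key, value) rows."""
    out = []
    for row in rows:
        for column, cell in row.items():
            if column == ungroup_by or cell is None:
                continue  # keep the ungroup-by column as-is; skip empty pivot cells
            out.append({ungroup_by: row[ungroup_by], key_name: column, value_name: cell})
    return out

wide = pivot(rows, "branch", "quarter", "sales")
narrow = unpivot(wide, "branch", "quarter", "sales")
```

Unpivoting returns three rows, not the original four: the two B2/Q1 rows were already summed by the pivot.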

● Surrogate Key transformation

● Why should we use a surrogate key?

● Configuring the starting integer for the surrogate key

● Scenario: your bank is “ICICI”. For every record, a unique CustomerKey should be generated, such as ICICI101 for the first customer and ICICI102 for the second. But the surrogate key gives only integer values, such as 101 for the first and 102 for the second. How do you handle this scenario, which requires a combination of a string and an integer?
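In a data flow, one way to handle this (an assumption, not necessarily the method taught in the session) is to let the Surrogate Key transformation start at 101 and then add a Derived Column with an expression such as `concat('ICICI', toString(CustomerKey))`, where `CustomerKey` is a hypothetical name for the key column. The plain-Python sketch below mirrors those two steps.

```python
from itertools import count

def add_surrogate_key(rows, key_name="CustomerKey", start=101):
    """Mimic a Surrogate Key transformation: attach an incrementing integer to each row."""
    counter = count(start)
    return [{**row, key_name: next(counter)} for row in rows]

def add_prefixed_key(rows, prefix="ICICI", key_name="CustomerKey"):
    """Mimic a Derived Column step that turns 101 into 'ICICI101'."""
    return [{**row, key_name: f"{prefix}{row[key_name]}"} for row in rows]

# Hypothetical customer rows.
customers = [{"name": "Asha"}, {"name": "Ravi"}]
keyed = add_prefixed_key(add_surrogate_key(customers))
```

Keeping the integer generation and the string formatting as separate steps means the starting number can change without touching the prefix logic.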

● Rank transformation in data flows – Part 1

● Why sorting data is required for the Rank transformation

● Sorting options: “Ascending” and “Descending”

● When to use the “Ascending” option for ranking

● When to use the “Descending” option for ranking

● Dense rank and how it works

● Non-dense rank (normal rank) and how it works

● Why we should not use a surrogate key for ranking

● What is the difference between dense rank and non-dense rank (normal rank)?

● Limitations of the Rank transformation

● Implementing custom ranking with a real-time scenario

● Custom ranking implementation with Sort, Surrogate Key, new branch, Aggregate, and Join transformations

● Scenario:

-> Problem statement: a school has 100 students. One student scored 90 marks, and the remaining 99 students failed with 10 marks each. The Rank transformation of ADF data flows gives rank 1 to the student who scored 90 and rank 2 to the failed students who scored 10. But the school management wants to give a gift to the top 2 rankers, and because all the 2nd rankers failed with the same lowest score, every student would get a gift. How do you handle this scenario?
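The difference between dense and non-dense (normal) ranking asked about above can be checked with a small plain-Python sketch. These two functions are illustrations, not the Rank transformation itself; scores are ranked in descending order, so the highest score gets rank 1. The quadratic `index` lookups are fine for a demonstration but not for large data.

```python
def standard_rank(scores):
    """Non-dense (normal) ranking: ties share a rank and the next rank skips ahead."""
    ordered = sorted(scores, reverse=True)  # descending: highest score gets rank 1
    return [ordered.index(score) + 1 for score in scores]

def dense_rank(scores):
    """Dense ranking: ties share a rank and the next rank follows without gaps."""
    distinct = sorted(set(scores), reverse=True)
    return [distinct.index(score) + 1 for score in scores]

marks = [90, 80, 80, 70]
print(standard_rank(marks))  # [1, 2, 2, 4]
print(dense_rank(marks))     # [1, 2, 2, 3]

school = [90] + [10] * 99
print(max(dense_rank(school)))  # 2: all 99 failed students share rank 2
```

With the school data, both functions give rank 2 to all 99 failed students, which is why the built-in ranks alone do not solve the gift problem.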

● Window transformation – Part 1

● Window transformation

● Cumulative average

● Cumulative sum

● Cumulative max

● Dense rank for each partition

● Treating all rows as a single window and applying cumulative aggregation
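A plain-Python sketch of the cumulative aggregations listed above, assuming hypothetical monthly sales rows partitioned by region and ordered by month. Passing `partition_by=None` mimics treating all rows as a single window. This only illustrates the idea; in a data flow, the Window transformation's partition, sort, and window-column settings do this work.

```python
from itertools import accumulate, groupby

# Hypothetical monthly sales rows.
sales = [
    {"region": "North", "month": 1, "sales": 100},
    {"region": "North", "month": 2, "sales": 300},
    {"region": "North", "month": 3, "sales": 200},
    {"region": "South", "month": 1, "sales": 50},
    {"region": "South", "month": 2, "sales": 150},
]

def window(rows, partition_by, order_by, value):
    """Add cumulative sum, average, and max of `value` per partition, in order_by order.

    partition_by=None treats all rows as a single window.
    """
    def part(row):
        return row[partition_by] if partition_by else None

    ordered = sorted(rows, key=lambda row: (part(row), row[order_by]))
    out = []
    for _, group in groupby(ordered, key=part):
        group = list(group)
        values = [row[value] for row in group]
        running_sum = list(accumulate(values))
        running_max = list(accumulate(values, max))
        for i, row in enumerate(group):
            out.append({
                **row,
                "cum_sum": running_sum[i],
                "cum_avg": running_sum[i] / (i + 1),
                "cum_max": running_max[i],
            })
    return out

per_region = window(sales, "region", "month", "sales")
single_window = window(sales, None, "month", "sales")
```

The cumulative values restart at each new region, which is what distinguishes a partitioned window from the single-window case.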

● Parse transformation in Mapping DataFlows.

● How to handle and parse string collections (delimited string values)

● How to handle and parse XML data

● How to handle and parse JSON data

● Converting complex nested JSON structures into CSV/text files

● Complex data processing (transformations)

● How to parse nested JSON records

● How to flatten an array of values into multiple rows

● If data has complex structures, which data store formats support them?

● How to write into JSON format

● How to write into a flat file format (CSV)

● Transformations used to process data:

1. Parse

2. Flatten

3. Derived Column

4. Select

5. Sink

● Reading JSON data (from vertical format)

● Reading a single JSON record (document)

● Reading an array of documents

● Converting complex types into strings using the Stringify and Derived Column transformations

● Transformations used in the data flow:

-> Source

-> Stringify

-> Derived Column

Expression: toString(complexColumn)

-> Select

-> Sink

● Assert Transformation

● Setting Validation Rules for Data.

● Types of Assert:

-> Expect True

-> Expect Unique

-> Expect Exists

● isError() function to validate a record as “Valid” or “Invalid”.

● Assert transformation – Part 2

● Assert type “Expect Unique”

● Rule for null values

● Rule for ID ranges

● Rule for ID uniqueness

● hasError() function to identify which rule failed

● Difference between isError() and hasError()

● Assert transformation – Part 3

● How and why to configure additional streams

● Assert type “Expect Exists”

● How to validate that a record reference is available in any one of multiple additional streams (a scenario implementation)

● How to validate that a record reference is available in all of multiple additional streams (a scenario implementation)

● Incremental data loading (Part 3)

● Implementation of incremental load (delta load) for multiple tables with a single pipeline
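A common way to implement incremental (delta) loading for many tables is the watermark pattern: keep the last loaded `LastModified` value per table, copy only newer rows, then advance the watermark. The outline does not say which technique the session uses, so treat this plain-Python sketch as an assumption; the table names, the `LastModified` column, and the in-memory watermark store are all hypothetical.

```python
from datetime import datetime

# Hypothetical source tables, each with a LastModified column.
source = {
    "customers": [
        {"id": 1, "LastModified": datetime(2023, 1, 1)},
        {"id": 2, "LastModified": datetime(2023, 1, 5)},
    ],
    "orders": [
        {"id": 10, "LastModified": datetime(2023, 1, 3)},
    ],
}
# Hypothetical watermark store: last loaded LastModified value per table.
watermarks = {"customers": datetime(2023, 1, 2), "orders": datetime(2023, 1, 3)}

def incremental_load(source, watermarks):
    """For each table, take only rows changed since its watermark, then advance it."""
    delta = {}
    for table, rows in source.items():  # like a ForEach over the table list
        old = watermarks[table]
        new = max((row["LastModified"] for row in rows), default=old)
        delta[table] = [row for row in rows if old < row["LastModified"] <= new]
        watermarks[table] = new  # like updating a watermark table after the copy
    return delta

delta = incremental_load(source, watermarks)
```

Only customer 2 is picked up; the `orders` table has nothing newer than its watermark, so its delta is empty and a second run would copy nothing.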

- Error Handling and Validations

- Snowflake pricing model, selecting the best edition, and calculating credit usage

- Resource monitoring

- Data masking

- Partitioning and clustering in Snowflake

- Materialized views and normal views

- Integration with Python

- Integration with AWS, Azure, and Google Cloud

- Best Practices to follow

- SCD implementation using streams (CDC)

● Alter Row transformation – Part 1

● Removing records that match specific criteria from the sink (table)

● Alter Row transformation – Part 2

● Removing a given list of records, with no specific criteria, from the sink

● Alter Row transformation – Part 3

● Updating a given list of records in the sink

● SCD (Slowly Changing Dimensions) Type 1 implementation

● Alter Row transformation with the Upsert action

● A real-time assignment (Assignment 1 and Assignment 2) on SCD

● Incremental load and SCD combination

● How to implement SCD without the Alter Row transformation (before it was introduced in data flows)

● How the Exists transformation helps

● How to implement SCD without the data flow features of ADF Version 2

● How stored procedures help implement SCD

● Combining rows of multiple tables with different schemas with a single Union Transformation.

● Problem with Multiple Unions in DataFlow.

● Types of Sources and Sinks

● Source Types:

-> Dataset

-> Inline

● Sink Types:

-> Dataset

-> Inline

-> Cache

● When to use Dataset and when to use Inline

● Advantage of Cache: reusing the output of a transformation

● How to write output into a cache

● How to reuse the cached output

● Scenario: generating incremental IDs based on the maximum ID of the sink dataset – Part 1

● Scenario: generating incremental IDs based on the maximum ID of the sink dataset – Part 2

● Writing outputs into JSON format

● Load Azure SQL data into a JSON file

● Cached lookup with a cache sink – Part 1

● Scenario:

Two source files: 1. Employee, 2. Department

The column common to both is dno (department number), which serves as the join (key) column.

● Task: without using the Join or Lookup transformations, join the two datasets using a “Cached Lookup” from a cache sink to improve performance.

● Other alternatives to this cached lookup

● How to configure the key column for the cached lookup

● How to access the values of the lookup key in the Expression Builder

● lookup() function in expressions

● sink#lookup(key).column

● Cached lookup with a cache sink – Part 2

● The scenario below shows how cached output is used by multiple transformations.

● Scenario:

Single input file: Employee

Two transformations are required:

1. For each employee, find their salary occupancy in their department.

2. For each employee, find their salary status in their department as “Above Average” or “Below Average”.

The common input for these two transformations is the sum() and avg() aggregations grouped by department number. This should be sent to a cache sink as a cached lookup.

Each transformation's output should go to a separate output file.

● In the above data flow, which flows are executed in parallel and which are executed in sequence?

● How Spark knows the dependencies between flows [using its DAG (directed acyclic graph) engine]

● Scope of the cache sink output [problem statement]

● Using multiple cache sink outputs in a single transformation

● Scenario:

The Employee table has id, name, salary, gender, dno, dname, and location columns, with two records (IDs 101 and 102).

New employees are placed in the data lake file newdata.txt with name, salary, gender, and dno fields.

Department name and department location fields are available in the department.txt file of the data lake.

Insert all new employees into the Employee table in Azure SQL, with incremental IDs based on the maximum ID of the table, along with the department name and location.

● Sink1: write max(id) from the Employee table (cache sink)

● Sink2: write all rows of department.txt into a cache sink, and configure dno as the lookup key column

● Read data from the new employees file and generate the next employee ID with the help of the surrogate key and the Sink1 output

● Generate the lookup columns (department name, location) from Sink2 and load them into the target Employee table in Azure SQL
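The steps above can be mirrored in plain Python to see how the two cache sinks feed the final load: Sink1 becomes a single max(id) value, and Sink2 becomes a dictionary keyed by dno, playing the role of `sink2#lookup(dno)`. The sample rows and city names are hypothetical, and this is a sketch of the logic, not data flow script.

```python
# Hypothetical data mirroring the scenario.
employee_table = [
    {"id": 101, "name": "Asha", "salary": 5000, "gender": "F", "dno": 10, "dname": "HR", "location": "Pune"},
    {"id": 102, "name": "Ravi", "salary": 6000, "gender": "M", "dno": 20, "dname": "Sales", "location": "Delhi"},
]
departments = [  # contents of department.txt
    {"dno": 10, "dname": "HR", "location": "Pune"},
    {"dno": 20, "dname": "Sales", "location": "Delhi"},
]
new_employees = [  # contents of newdata.txt
    {"name": "Kiran", "salary": 4500, "gender": "M", "dno": 20},
    {"name": "Meena", "salary": 5200, "gender": "F", "dno": 10},
]

# Sink1 (cache): the single value max(id) from the target table.
sink1_max_id = max(row["id"] for row in employee_table)

# Sink2 (cache): department rows keyed by dno, like a cached lookup with dno as key column.
sink2 = {dept["dno"]: dept for dept in departments}

def load_new_employees(rows, max_id, dept_lookup):
    """Assign IDs after max_id (surrogate key starting at 1) and add department columns."""
    out = []
    for offset, row in enumerate(rows, start=1):
        dept = dept_lookup.get(row["dno"], {})  # like sink2#lookup(dno)
        out.append({
            "id": max_id + offset,
            **row,
            "dname": dept.get("dname"),
            "location": dept.get("location"),
        })
    return out

employee_table.extend(load_new_employees(new_employees, sink1_max_id, sink2))
```

An unknown dno yields `None` for the department columns instead of failing, which resembles how an unmatched lookup produces nulls.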

● How two different data flows exchange values – Part 1

● Scope of the cache sink of a data flow

● What is a variable?

● What is the difference between parameters and variables?

● How to Create a Variable in Pipeline

● Variable Data Types:

-> String

-> Boolean

-> Array

● Create a data flow with the following transformation sequence to find the average of a column:

1. Source

2. Aggregate (for finding Average)

3. Sink Cache (to write Average of column)

● How to pass cache sink output to the activity output, and how to enable this feature

● Create a pipeline to call this data flow, which writes into the cache sink

● How to understand the output of a Data Flow activity that writes into a cache sink and writes to the activity output

● Fields of the Data Flow activity output:

1. runStatus

2. Output

3. Sink

4. value as Array

● “Set Variable” Activity

● How to Assign Value to Variable using “Set Variable” Activity.

● How two different data flows exchange values – Part 2

● How to assign data flow cache sink output to a variable

● Dynamic expression to assign a value to a variable using the “Set Variable” activity

● @activity('dataflow').output

● runStatus.output.sink.value[].field

● Understand the output of the “Set Variable” activity

● How two different data flows exchange values – Part 3

● Data flow parameters

● How to create a parameter for a data flow

● Fixing a default value for a data flow parameter

● How to access a data flow parameter

● $<parameter_name>

● Accessing a variable value from the pipeline in a data flow parameter

● @variables('<variable_name>')

● Dynamic expression to access a variable value in a data flow parameter

● From the above 3 sessions, you will learn how to pass cache sink output to other data flows.

● Categorical distribution of data into multiple files (one separate file generated for each data group) – Part 1

● Scenario: a department name column contains the values “Marketing”, “Hr”, and “Finance”, and these values are repeated across rows. We need to distribute all rows dynamically into these 3 categories.

● Example: all rows related to the Marketing department go into the “marketing.txt” file.

● Find the unique values in the categorical column and eliminate duplicate values

● Convert the unique column values into an array of strings

● collect() function

● Aggregate transformation using the collect() function

● Write the array of strings into a cache sink

● Enable the cache's “Write to activity output” option

● Create a pipeline to call this data flow

● Create a variable in the pipeline with the Array data type

● Access the data flow cache array in the array variable with the “Set Variable” activity using dynamic expressions

● Categorical distribution of data into multiple files (one separate file generated for each data group) – Part 2

● Passing the array variable to ForEach

● Configuring the input (value of the array variable) for the ForEach activity

● Data Flow activity inside ForEach

● Passing the ForEach current item to a data flow parameter

● Applying a filter with the data flow parameter

● Data flow parameter as the file name in the Sink transformation
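Here is a plain-Python sketch of the two-part pattern above: one step collects the distinct category values into an array, and a ForEach-style loop runs a filtered write per category, using the category as the file name. The rows and the lowercase `<category>.txt` naming are hypothetical; in ADF this would be two data flows, a Set Variable activity, and a ForEach activity rather than code.

```python
# Hypothetical employee rows with a repeating categorical column.
rows = [
    {"name": "Asha", "dname": "Marketing"},
    {"name": "Ravi", "dname": "Hr"},
    {"name": "Kiran", "dname": "Finance"},
    {"name": "Meena", "dname": "Marketing"},
]

def collect_distinct(rows, column):
    """Data flow 1: distinct values of the column, collected into one array (cache sink)."""
    return sorted({row[column] for row in rows})

def distribute(rows, column, category):
    """Data flow 2, run once per ForEach item: filter on the parameter, name the file after it."""
    file_name = f"{category.lower()}.txt"
    return file_name, [row for row in rows if row[column] == category]

categories = collect_distinct(rows, "dname")  # value held in the pipeline's array variable
outputs = dict(distribute(rows, "dname", category) for category in categories)
```

Because the category list is computed from the data, a new department value would produce a new file without changing the pipeline.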

● SCD (Slowly Changing Dimensions ) Type 2 – Part 1

● Difference between SCD Type 1 and SCD Type 2

● What is the delta of source data?

● How to capture the delta (solution: incremental loading)

● Behavior of SCD Type 1

● If a delta record already exists in the target, what happens in SCD Type 1?

● If a delta record does not exist in the target, what happens in SCD Type 1?

● How to capture deleted records from the source into the delta

● Behavior of SCD Type 2

● What should happen to the source delta in the target?

● What should happen to the old records of the target when the delta is inserted into the target?

● Importance of the surrogate key in the target table

● What is the version of a record?

● How to recognize whether a record is active or inactive in the target table

● Importance of the active status column in the target table to implement SCD Type 2

● What additional columns are required in the target table to implement SCD Type 2?

● Preparing data objects and data for SCD Type 2

● Create the target table in Azure SQL or Synapse

● What is an identity column in Azure SQL?

● Identity column as the surrogate key in the target table

● Possible values for the active status column in the target table

● Preparing data lake file for delta
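The SCD Type 2 behavior described above (expire the old version, insert a new version with a new surrogate key and an active flag) can be sketched in plain Python. The column names (`sk`, `emp_id`, `city`, `active`, `start_date`, `end_date`) are hypothetical, and the sketch ignores deleted records; in ADF this logic would be built from data flow transformations (for example, Derived Column and Alter Row) rather than code.

```python
from datetime import date

def scd_type2(target, delta, key, tracked, load_date):
    """Apply a delta to an SCD Type 2 target list in place and return it.

    Each target row carries: sk (surrogate key), the business key, the tracked
    attributes, active (1/0), start_date, and end_date.
    """
    next_sk = max((row["sk"] for row in target), default=0) + 1
    for change in delta:
        current = next(
            (row for row in target if row[key] == change[key] and row["active"] == 1),
            None,
        )
        if current is not None and all(current[col] == change[col] for col in tracked):
            continue  # tracked attributes unchanged: nothing to do
        if current is not None:
            # Expire the old version instead of overwriting it; this keeps the history.
            current["active"] = 0
            current["end_date"] = load_date
        target.append({
            "sk": next_sk,
            key: change[key],
            **{col: change[col] for col in tracked},
            "active": 1,
            "start_date": load_date,
            "end_date": None,
        })
        next_sk += 1
    return target

# Hypothetical target and delta.
target = [{"sk": 1, "emp_id": 101, "city": "Pune", "active": 1,
           "start_date": date(2023, 1, 1), "end_date": None}]
delta = [{"emp_id": 101, "city": "Delhi"}, {"emp_id": 102, "city": "Mumbai"}]
scd_type2(target, delta, key="emp_id", tracked=["city"], load_date=date(2023, 2, 1))
```

Employee 101 now has two versions (Pune inactive, Delhi active), which is the history that SCD Type 1 would have lost by overwriting.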

● SCD Type 2 – Part 2

● SCD Type 2 – Part 3

● SCD Type 3 – Part 1

● SCD Type 3 – Part 2

● SCD Type 4 – Part 1

● SCD Type 4 – Part 2

● SCD Type 6 – Part 1

● SCD Type 6 – Part 2

● SCD Type 6 – Part 3

Key Features of Azure Data Factory Training in Bangalore

- D Turtle Institute is a reputed training institute in Bangalore that provides training in various technologies including Azure Data Factory.

- The trainers at D Turtle Institute are industry experts with years of experience in designing and implementing data integration solutions using Azure Data Factory.

- The training methodology is a blend of theory and hands-on exercises, where you will get to work on real-life use cases to gain practical experience.

- The course covers all the key features of Azure Data Factory, including data pipelines, data transformation, and data movement.

- You will learn how to use various data sources and destinations, such as Azure SQL Database, Azure Blob Storage, and Azure Data Lake Storage.

- The course also covers how to integrate Azure Data Factory with other Azure services, such as Azure Databricks and Azure Synapse Analytics.

- You will learn how to monitor and manage data pipelines using Azure Data Factory monitoring tools.

- Upon completing the course, you will receive a certification from D Turtle Institute, which will validate your skills and knowledge in Azure Data Factory.

Why Choose us for Azure Data Factory Course?

Expert Trainers


The trainers at D Turtle Institute are experienced industry experts with in-depth knowledge of Azure Data Factory and data integration technologies.

Comprehensive Course Material


The institute provides comprehensive course material that covers all the essential topics related to Azure Data Factory.

Hands-on Experience


The course is designed to provide you with hands-on experience with Azure Data Factory through real-world use cases and projects.

Flexibility


D Turtle Institute offers flexible training schedules and modes, such as online, classroom, and self-paced learning.

Customizable Course Curriculum

Our instructors customize the course curriculum to meet your specific learning requirements and goals.

Certification

Upon completing the course, you will receive a certification from D Turtle Institute, which will add value to your resume and career.

Industry Networking

D Turtle Institute offers networking opportunities with industry professionals and experts, which can help you expand your knowledge and career prospects.

Affordable Pricing

The course fees at D Turtle Institute are affordable and competitive compared to other training institutes.

Compact Batch

We limit each batch to 10 students so that every student gets individual attention from the trainers.

Benefits of Azure Data Factory

Scalability

Azure Data Factory is a scalable service that can handle large amounts of data processing and integration, making it an ideal choice for organizations with growing data needs.

Integration with Azure Services

Azure Data Factory seamlessly integrates with other Azure services, such as Azure Synapse Analytics and Azure Databricks, allowing for a unified data integration and analysis environment.

Cost-Effective

Azure Data Factory is a cost-effective solution that enables organizations to manage data integration and processing without the need for expensive hardware and infrastructure.

Automation

Azure Data Factory enables organizations to automate data integration and processing workflows, reducing manual effort and improving efficiency.

Security

Azure Data Factory provides robust security features that ensure the confidentiality, integrity, and availability of data, making it a trusted choice for sensitive data processing.

Real-time Data Integration

Azure Data Factory supports near real-time data integration (for example, pipelines started by event-based triggers when a new file lands in storage), enabling organizations to get timely insights from their data, make informed decisions, and respond quickly to changing business needs.

Services

Azure Data Factory Training Offline

D Turtle Institute provides classroom-based Azure Data Factory Training in Bangalore, where students learn face-to-face with trainers in a traditional setting.

Azure Data Factory Training Online

D Turtle Institute offers online Azure Data Factory training courses that enable students to learn from anywhere, at their own pace, using a computer, laptop, or mobile device.

Azure Data Factory Corporate Training

D Turtle Institute offers corporate training programs for organizations that want to train their employees in new technologies, such as Azure Data Factory.

Placement Program

D Turtle Institute provides placement assistance and guidance to students, which helps them land their dream job in the field of data integration and Azure Data Factory.

Project Training

The ultimate goal of our project training is to ensure that students have the tools and techniques they need to plan, execute, and deliver successful projects within the constraints of time, scope, and budget.

Azure Data Factory Training Videos Pre-Recorded

D Turtle Institute provides pre-recorded video courses that enable students to learn at their own pace, anytime and anywhere.

About Azure Data Factory Training in Bangalore

Azure Data Factory is a cloud-based data integration service that allows organizations to create, schedule, and manage data pipelines that move data from various sources to target destinations. With Azure Data Factory, organizations can connect to a wide range of data sources holding structured, semi-structured, and unstructured data, both on-premises and in the cloud.

Azure Data Factory provides a code-free and easy-to-use interface for designing and deploying data pipelines, which can help organizations save time and money. With Azure Data Factory, organizations can also automate their data processing and integration workflows, reducing manual effort and improving efficiency.

The Azure Data Factory Training in Bangalore at D Turtle Institute provides students with comprehensive knowledge and hands-on experience with the cloud-based data integration tool.

Our course curriculum covers all the essential features and functionalities of Azure Data Factory, and the trainers are experienced and knowledgeable.

Overall, the Azure Data Factory Training in Bangalore at D Turtle Institute is a valuable investment for anyone looking to enhance their skills in data integration and work with one of the most powerful cloud-based data integration services.

Enroll in our Azure Data Factory Training in Bangalore and get comprehensive training that builds the skills you need to start a lucrative career.

Our Accomplishments

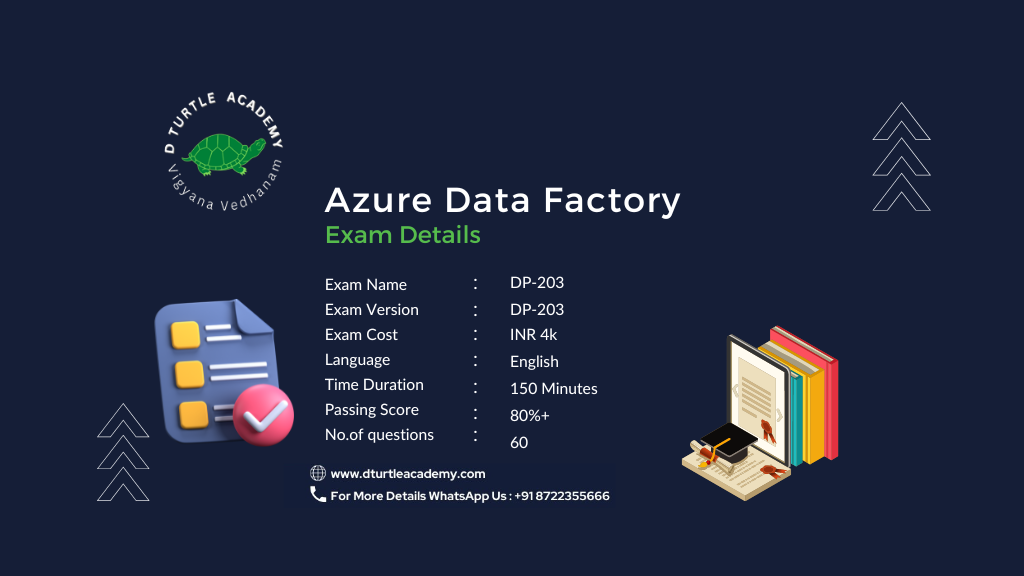

Azure Data Factory Course & Certification

Microsoft certifications that cover Azure Data Factory are among the most sought-after certifications in the IT industry.

A certification is proof of your expertise in Azure Data Factory, and it can help you land a new job or even earn a promotion.

The Azure Data Engineer Associate certification validates the candidate’s ability to design and implement data solutions using Azure services, including Azure Data Factory.

- Exam DP-203: Data Engineering on Microsoft Azure

Skills Developed After Azure Data Factory Training in Bangalore

- Ability to design and implement data integration workflows using Azure Data Factory

- Knowledge of working with various data sources, including structured, unstructured, and semi-structured data

- Proficiency in transforming, processing, and loading data into various target destinations, such as Azure storage services and cloud-based data warehouses

- Ability to monitor and troubleshoot data pipelines to ensure data quality and availability

- Understanding of how to automate data processing and integration workflows to improve efficiency and reduce manual effort

- Familiarity with other Azure services that integrate with Azure Data Factory, such as Azure Synapse Analytics and Azure Databricks, and their role in data analysis and processing.

Prerequisites for the Azure Data Factory Course

- Basic knowledge of SQL and relational databases

- Understanding of data integration concepts and techniques

- Familiarity with ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) processes

- Basic knowledge of cloud computing and cloud-based services

- Familiarity with Microsoft Azure and its services

- Knowledge of programming languages, such as C# or Python, is helpful but not required.

- Basic understanding of data warehousing and big data technologies is a plus, but not mandatory.

FAQs

Yes, it’s fast and easy to build code-free or code-centric ETL and ELT processes using Azure Data Factory.

Azure Data Factory is a cloud-based data integration service that Microsoft provides as part of the Azure cloud platform. It allows you to create, schedule, and manage data integration and transformation pipelines that move and transform data from various sources to different destinations.

D Turtle Academy provides Azure Data Factory training in several modes: online training, classroom training, and self-paced video learning. You can enroll in any of these modes to learn Azure Data Factory from a basic to an advanced level.

The Azure Data Factory training at D Turtle Institute covers topics such as data ingestion, transformation, data output, and monitoring and troubleshooting data pipelines using Azure Data Factory.

The trainers at D Turtle Institute are experienced and knowledgeable professionals with expertise in data integration and cloud computing.

The duration of the Azure Data Factory training at D Turtle Institute varies based on the type of training program, but typically ranges from 1-2 months.

Yes, D Turtle Institute offers online training programs in addition to classroom and corporate training programs.

Students are assessed through practical projects and assignments that enable them to gain real-world experience with Azure Data Factory.

Yes, D Turtle Institute provides placement assistance to help students find suitable job opportunities after completing the training program.

For detailed information about course fees, call us or send us an email. Our team will get in touch with you as soon as possible.

Azure Data Factory certification validates the candidate’s skills and knowledge in data integration and processing using Azure Data Factory, which can enhance their career prospects and enable them to take on more challenging roles in the field of data engineering.